Artificial intelligence (AI) has expanded to various fields, and the industrial area is no exception.

In maintenance, AI has begun to automate processes and can improve the early detection of problems that cause unavailability in critical machinery, especially in energy generation and gas transportation.

Applications of AI in maintenance include:

- Risk assessment

- Early diagnosis

- Forecasting the consequences of failure

- Recommending treatments based on previous experiences

Machine learning (ML), a subset of AI that allows computers to learn from data, has also proven effective at predicting outcomes in many areas. In medicine, for example, AI and ML have shown greater accuracy and speed than doctors in predicting certain outcomes; the early detection of cancer is a notable, though controversial, example.

In the industrial area, these technologies also have the potential to improve diagnosis, the quality of recommendations, and the detection of potential future scenarios.

Experience with these next-generation tools is not widely known, and very little has been published, mainly because of the secrecy that surrounds innovative services and protects them from competitors. This article analyzes the application of AI and ML algorithms to predicting turbomachinery oil degradation, including current applications, limitations, and future perspectives.

Oil Degradation: A Race Against Time

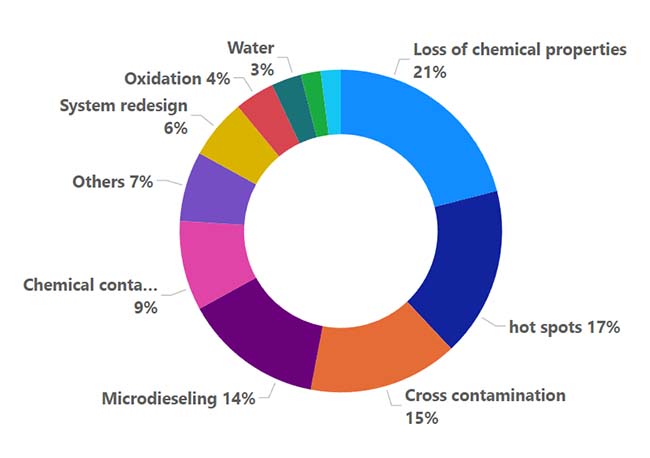

Premature oil degradation is among the three main causes of unplanned shutdowns and the unavailability of turbines and compressors. Although advances have been made in the design, diagnosis, and treatment of this type of oil, early identification of problems remains a major challenge.

Degradation, which in some cases leads to the formation of varnish or lacquer, has shown a high incidence, particularly because of the introduction of new base oil groups, higher operating temperatures, and the smaller size of lubrication systems in turbomachinery.

According to a study of a database covering 68 combined-cycle plants [1], 68% of gas turbines have suffered lubricant degradation problems at some point, and 84% of the oils in electrohydraulic systems have had degradation problems.

Meanwhile, depending on the source consulted, consumption of lubrication system cleaning agents has rebounded unusually and unexpectedly over the last five years.

Table 1

Consumption of lubrication system cleaning agents has increased significantly in the last five years.

These results indirectly suggest that lubricants in the power generation sector are undergoing unusual degradation, either because of cycling driven by unstable demand or because lubrication in newer machines is harsher on the oil. Alternatively, the marketing of these cleaning agents may simply have influenced their purchase and use by end customers.

That said, lubricant degradation is nothing new; it is a normal part of the oil's life cycle. Laboratory analyses aim to detect the physicochemical changes that occur in the lubricant, and a sound condition-based maintenance program takes these changes into account to plan timely and appropriate maintenance actions.
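A minimal sketch of that comparison, assuming results are trended against the new-oil baseline: each parameter's relative change is computed and flagged when it exceeds an alert limit. The parameter names and the values in `ALERT_LIMITS` are hypothetical placeholders, not recommended limits; real limits come from the oil supplier, the OEM, and the site's own program.

```python
# Hypothetical alert limits: maximum allowed relative change vs. new oil.
# These are placeholders for illustration, not recommended values.
ALERT_LIMITS = {
    "TAN": 0.25,        # flag if TAN changes by more than 25%
    "viscosity": 0.05,  # flag if viscosity changes by more than 5%
    "RPVOT": 0.50,      # flag if oxidation life changes by more than 50%
}


def relative_change(baseline: float, current: float) -> float:
    """Fractional change of a parameter relative to its new-oil baseline."""
    return (current - baseline) / baseline


def flag_changes(baseline: dict[str, float],
                 sample: dict[str, float]) -> dict[str, float]:
    """Return {parameter: relative change} for every limit that is exceeded."""
    flagged = {}
    for name, limit in ALERT_LIMITS.items():
        if name in baseline and name in sample:
            change = relative_change(baseline[name], sample[name])
            if abs(change) > limit:
                flagged[name] = change
    return flagged


new_oil = {"TAN": 0.07, "viscosity": 32.0, "RPVOT": 1000.0}
sample = {"TAN": 0.08, "viscosity": 31.5, "RPVOT": 400.0}
print(flag_changes(new_oil, sample))  # {'RPVOT': -0.6}
```

Here a TAN rising from 0.07 to 0.08 mg KOH/g is a change of about +14%, which these placeholder limits do not flag, while the 60% drop in oxidation life is flagged.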

Natural Learning, Artificial Learning, and Machine Learning

Human beings have been learning in three specific ways for thousands of years:

- Through evolution. It has taken us thousands of years to acquire particular skills.

- Through experience. This is what each person cultivates, defines, and controls.

- Through culture, which transmits socially acquired knowledge.

Until recently, these were the only sources of learning, but with the emergence of AI, we now have an additional source of knowledge.

This new source is not human and, in some cases, can manage itself: computers (or microchips) can discover knowledge from data.

One of the main characteristics of knowledge is the speed at which it is transmitted. Knowledge by evolution has required much more time than knowledge by experience, which in turn takes more time than knowledge transmitted through culture.

From this point of view, it is commonly assumed that knowledge generated by computers will surpass, and in some fields already surpasses, the different types of natural knowledge in both speed and quantity.

In many cases, this knowledge will be a synergy between the system that constantly generates and analyzes knowledge and the human being, who contributes capabilities that the intelligent system does not yet have.

On the other hand, algorithms are nothing new; they have been with us since mathematics was first applied to daily life. For now, algorithms must be developed for specific fields: one algorithm is needed for cancer detection, another for playing chess, and so on.

For now, and only for now, there is no algorithm that can be applied across fields, in other words, a master algorithm. Until one is achieved, human beings remain unique in being able to analyze different topics simultaneously.

Something similar applies to anomaly detection in lubricant degradation, where the data analyst's personal experience plays an important role, usually complemented by knowledge passed on by someone with greater experience, knowledge, and skills.

The analysis can also be supported by a computer tool capable of, at the very least, storing information from past cases or situations that share some point in common with the current one.

But on what basis can a system be built that, in our case, allows the early identification of the physicochemical changes that lead to potential lubricant failures?

The answer probably lies in one, or a combination, of the leading ML schools, on which new applications are already being developed in other areas:

- Learning by imitating the human brain. Inspired by neuroscience, this school tries to emulate the brain’s circuits, the function of neurons, and the creation of new synapses by giving specific algorithms artificial characteristics that make them work like a human brain. An applied example of this type of AI is speech recognition.

- Learning by imitating evolution. The algorithm is designed to evolve, improving with each generation. This type of ML has been applied mainly to improving the design of electronic components.

- Bayesian learning. In this branch, you start with many hypotheses, each with a degree of uncertainty. As new evidence is observed, the uncertainty of each hypothesis is updated until one with a high degree of likelihood is found.

- Symbolic learning, which works like a mathematician performing induction on data. Given a data set, hypotheses are formulated to explain the data, tested against a set of data, and refined based on the results until an adequate degree of acceptance is reached. This is how the scientific method works; the big difference is that an intelligent system carries out the entire process. An example of this type of ML was the discovery in 2021 of a new strain of malaria.

- Learning by analogy. This last branch of ML is inspired above all by psychology. Previously stored information is retrieved and compared with the case under study until analogous or similar points are found, allowing possible deviations to be identified in advance.
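The analogy-based branch is commonly implemented as nearest-neighbor retrieval. The sketch below assumes each stored case holds oil-analysis results plus a note of what later happened to that oil; it scales each parameter, computes the Euclidean distance to a new sample, and returns the closest cases. The `Case` structure, the parameter set, and the `SCALES` values are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Case:
    """A stored historical case (hypothetical structure)."""
    case_id: str
    features: dict[str, float]  # oil-analysis results, e.g. TAN, MPC, RPVOT
    outcome: str                # what later happened to this oil


# Hypothetical scale factors so that large-valued parameters (RPVOT, in
# minutes) do not dominate small-valued ones (TAN, in mg KOH/g).
SCALES = {"TAN": 0.1, "MPC": 10.0, "RPVOT": 500.0}


def distance(a: dict[str, float], b: dict[str, float]) -> float:
    """Euclidean distance between two samples over the scaled parameters."""
    return math.sqrt(sum(((a[k] - b[k]) / s) ** 2 for k, s in SCALES.items()))


def nearest_cases(sample: dict[str, float], library: list[Case],
                  k: int = 3) -> list[Case]:
    """Retrieve the k stored cases most similar to the new sample."""
    return sorted(library, key=lambda c: distance(sample, c.features))[:k]
```

Scaling is the key design choice: without it, a difference of a few hundred RPVOT minutes would swamp a TAN difference of a few hundredths. The outcomes of the retrieved cases are what hint at possible deviations in the new sample.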

AI Turbine Oil Diagnosis Beta Version

This article describes a first step in using generative AI built on a trained large language model (LLM).

One advantage of applying AI is that it can save a great deal of time in the testing phase. Physical test benches, in this case oxidation tests, can be replaced by virtual counterparts that generate massive amounts of data in less than 2% of the time the traditional tests require. This beta version uses precise virtual models based on known databases and case studies.
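The internals of the beta's virtual models are not described here. Purely as a toy illustration of how a virtual oxidation bench can produce many results quickly, the sketch below assumes that oxidation life (for example, RPVOT minutes) decays exponentially with service hours and that the decay rate doubles for every 10 °C rise in oil temperature, a common Arrhenius-style rule of thumb. `K_REF`, `T_REF`, and the temperature range are hypothetical placeholders, not fitted to any oil.

```python
import math
import random

# Toy virtual oxidation bench: every constant here is a hypothetical placeholder.
K_REF = 0.02  # decay rate of oxidation life per 1000 h at T_REF (hypothetical)
T_REF = 50.0  # reference bulk oil temperature in °C (hypothetical)


def decay_rate(temp_c: float) -> float:
    """Decay rate scaled by the rule of thumb 'doubles every 10 °C'."""
    return K_REF * 2 ** ((temp_c - T_REF) / 10.0)


def remaining_life(initial: float, temp_c: float, hours: float) -> float:
    """Oxidation life left after `hours` of service at a constant temperature."""
    return initial * math.exp(-decay_rate(temp_c) * hours / 1000.0)


def virtual_runs(n: int, initial: float, hours: float,
                 seed: int = 0) -> list[float]:
    """Simulate n virtual tests with operating temperatures drawn from 45-70 °C."""
    rng = random.Random(seed)
    return [remaining_life(initial, rng.uniform(45.0, 70.0), hours)
            for _ in range(n)]
```

Because each virtual run is a single formula evaluation, thousands of them take a fraction of a second, which illustrates where the time savings of virtual testing come from; the value of the results, however, depends entirely on how well the model is calibrated against real bench data.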

The first step is to upload the turbine's operating data and the oil analysis results. This information is then compared against the database, where any failure markers present in the lubricant are analyzed. An analogy of this process is the identification of early biomarkers of potentially cancerous cells.

The results are then presented in terms of two parameters:

- The first indicates the probability that the oil shows signs of chemical changes that can subsequently be transformed into insoluble matter, commonly identified as varnishes.

- The second parameter indicates the failure rate of oils with similar characteristics and conditions that showed degradation problems at a later stage.

- A third part, still embryonic, recommends maintenance actions or solutions if the first two parameters show signs of failure.
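The second parameter can be read as a conditional frequency: among historical oils whose results and operating conditions resemble the new sample, what fraction later developed degradation problems? The sketch below assumes that "similar" means every listed parameter falls within a relative tolerance of the sample's value; the `HistoricalOil` layout, the 20% default tolerance, and the field names are hypothetical, and the beta version may define similarity quite differently.

```python
import math
from dataclasses import dataclass


@dataclass
class HistoricalOil:
    """One historical record (hypothetical layout)."""
    params: dict[str, float]  # oil-analysis and operating data
    degraded_later: bool      # did this oil later show degradation problems?


def is_similar(sample: dict[str, float], other: dict[str, float],
               tol: float) -> bool:
    """True if every sample parameter is within a relative tolerance in `other`."""
    return all(k in other and abs(other[k] - v) <= tol * abs(v)
               for k, v in sample.items())


def failure_rate(sample: dict[str, float], history: list[HistoricalOil],
                 tol: float = 0.2) -> tuple[float, int]:
    """Share of similar historical oils that later degraded, and how many matched."""
    matches = [h for h in history if is_similar(sample, h.params, tol)]
    if not matches:
        return math.nan, 0  # no comparable cases: the rate is undefined
    failed = sum(h.degraded_later for h in matches)
    return failed / len(matches), len(matches)
```

Returning the number of matches alongside the rate is deliberate: a rate drawn from three similar oils deserves far less weight than one drawn from three hundred.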

The following case shows the results for a turbine oil sample with no signs of degradation:

- TAN (total acid number): slight increase of 14%

- MPC (membrane patch colorimetry) ΔE: 8

- Other parameters: no change

Laboratory diagnosis & comments:

Sample without significant changes.

Flagged fields do not require urgent maintenance action.

Monitor the trend of the oil condition and equipment degradation.

The particle count is at a low alert level; check the filtration system.

Pull another sample at the set frequency.

AI Diagnosis & Comments

- Oil Failure Probability: 37%

- Oil Failure Rate: 69%

- Recommended Action: Chemical filtration

Some Initial Steps and First Conclusions

Of the different ML schools, it is logical to expect some to be more applicable to physicochemical analysis. In a couple of tests on a data set, the methods that gave the best results, at least initially, were analogy-based methods and symbolic learning.
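As a minimal illustration of the symbolic approach, the sketch below searches for the single rule of the form "parameter > threshold means the oil later degrades" that best fits a training set, then tests that hypothesis on held-out data, mirroring the hypothesize-and-test cycle described earlier. This is a decision stump (a one-level decision tree), a deliberately simple stand-in for real symbolic learners; the record layout and parameter names are hypothetical.

```python
# Hypothetical record layout: (parameters, did the oil later degrade?)
Record = tuple[dict[str, float], bool]


def rule_accuracy(records: list[Record], param: str, threshold: float) -> float:
    """Accuracy of the rule 'param > threshold => degrades' on a data set."""
    correct = sum((params[param] > threshold) == degraded
                  for params, degraded in records)
    return correct / len(records)


def induce_rule(train: list[Record]) -> tuple[str, float, float]:
    """Hypothesize every single-parameter threshold rule; keep the best fit."""
    best = ("", 0.0, -1.0)  # (parameter, threshold, training accuracy)
    for param in train[0][0]:
        # Candidate thresholds: each distinct value seen in the training data.
        for threshold in sorted({params[param] for params, _ in train}):
            accuracy = rule_accuracy(train, param, threshold)
            if accuracy > best[2]:
                best = (param, threshold, accuracy)
    return best
```

The rule found on the training records is then scored with `rule_accuracy` on records it has never seen; if it performs poorly, the hypothesis is refined (more parameters, compound rules) and tested again.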

The analytical process generates an initial baseline model against which losses in the fluid's properties are measured, together with a degree of uncertainty about where those properties may go.

This model can be compared with hundreds of other models, or with as many as are available. The analysis can be carried out by a symbiotic human-machine system in which the human directs where the analysis should focus; after all, humans can analyze several topics simultaneously, even when they have nothing in common.

The synthetic part, meanwhile, carries out the large volume of calculations and defines the likely scenario with a confidence interval. This process is similar to the statistical method of error propagation.
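To make the error-propagation analogy concrete, the sketch below propagates measurement uncertainty into a relative TAN change such as the 14% increase in the case above, using the first-order formula σ_f² ≈ Σ (∂f/∂x_i)² σ_i² for independent inputs, and cross-checks it with a Monte Carlo simulation. The TAN values and their uncertainties are hypothetical; real uncertainties would come from the laboratory's method repeatability.

```python
import math
import random


def tan_change(current: float, baseline: float) -> float:
    """Relative change of TAN with respect to the new-oil baseline."""
    return current / baseline - 1.0


def tan_change_sigma(current: float, sigma_current: float,
                     baseline: float, sigma_baseline: float) -> float:
    """First-order (linear) error propagation for independent inputs."""
    d_current = 1.0 / baseline             # partial derivative w.r.t. current
    d_baseline = -current / baseline ** 2  # partial derivative w.r.t. baseline
    return math.hypot(d_current * sigma_current, d_baseline * sigma_baseline)


def monte_carlo_sigma(current: float, sigma_current: float,
                      baseline: float, sigma_baseline: float,
                      n: int = 100_000, seed: int = 1) -> float:
    """Same uncertainty estimated by simulation with normal input errors."""
    rng = random.Random(seed)
    samples = [tan_change(rng.gauss(current, sigma_current),
                          rng.gauss(baseline, sigma_baseline))
               for _ in range(n)]
    mean = sum(samples) / n
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / (n - 1))


change = tan_change(0.08, 0.07)
sigma = tan_change_sigma(0.08, 0.005, 0.07, 0.005)
low, high = change - 1.96 * sigma, change + 1.96 * sigma  # ~95% interval
```

With these hypothetical uncertainties of ±0.005 mg KOH/g on both readings, the approximate 95% interval around a +14% change includes zero, which is one reason a single TAN reading matters less than its trend.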

Likewise, increasingly advanced systems for detecting particles in fluids can apply calculations not only to determine the type of particle but also to weigh various hypotheses about a potential failure, confirming some and discarding those that do not fit the preferred model.
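Weighing competing failure hypotheses as particle evidence arrives is, in essence, the Bayesian approach: each hypothesis starts with a prior probability, and each observation rescales it by how likely that observation would be if the hypothesis were true. The hypotheses, particle types, and every probability in `PRIORS` and `LIKELIHOODS` below are hypothetical placeholders, not values from any particle analyzer or database.

```python
# Hypothetical failure hypotheses and prior beliefs (must sum to 1).
PRIORS = {"bearing wear": 0.3, "gear wear": 0.2, "oil degradation": 0.5}

# Hypothetical P(particle type observed | hypothesis).
LIKELIHOODS = {
    "bearing wear": {"babbitt": 0.7, "ferrous": 0.2, "soft_deposit": 0.1},
    "gear wear": {"babbitt": 0.1, "ferrous": 0.8, "soft_deposit": 0.1},
    "oil degradation": {"babbitt": 0.05, "ferrous": 0.05, "soft_deposit": 0.9},
}


def update(beliefs: dict[str, float], observation: str) -> dict[str, float]:
    """One application of Bayes' rule: posterior is prior times likelihood, normalized."""
    unnormalized = {h: p * LIKELIHOODS[h][observation] for h, p in beliefs.items()}
    total = sum(unnormalized.values())
    return {h: v / total for h, v in unnormalized.items()}


def discard_unlikely(beliefs: dict[str, float],
                     cutoff: float = 0.05) -> dict[str, float]:
    """Drop hypotheses below the cutoff and renormalize the survivors."""
    kept = {h: p for h, p in beliefs.items() if p >= cutoff}
    total = sum(kept.values())
    return {h: p / total for h, p in kept.items()}


beliefs = dict(PRIORS)
for particle in ["soft_deposit", "soft_deposit"]:
    beliefs = update(beliefs, particle)
```

Each new observation sharpens the distribution; `discard_unlikely` plays the role of ruling out hypotheses that no longer fit, while the cutoff controls how aggressively that happens.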

All this sounds good, as if it could be achieved tomorrow, but it is not that simple. One of the significant barriers is transferring human knowledge to its synthetic counterpart.

It takes more than writing a line of code that produces the expected results, or sitting specialized programmers in a room to build a near-perfect problem-identification model.

Fortunately for many, what makes us unique, at least for now, is that we can identify problems through analogies and comparisons, and that the synapses running through our nervous system are hard to imitate.

I have been fortunate enough to witness the first steps in applying ML to fluid analysis, and without a doubt, we are at the dawn of a new era.

It will predictably take time, but each step brings us closer to substantial improvements: higher production, less machinery unavailability, and better lubricant condition.

References

- Turbine Oil Analysis Data Analytics, Jorge Alarcon